Latent Space Exploration with Randomized Sparse Mixed Scale Autoencoders, regularized by the availability of image labels¶

Authors: Eric Roberts and Petrus Zwart

E-mail: PHZwart@lbl.gov, EJRoberts@lbl.gov ___

This notebook highlights some basic functionality with the pyMSDtorch package.

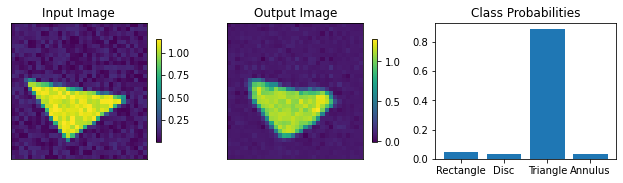

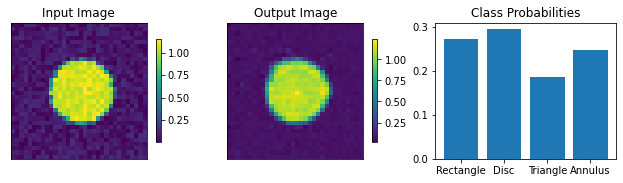

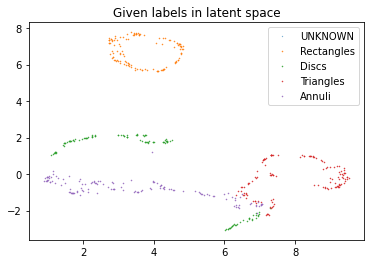

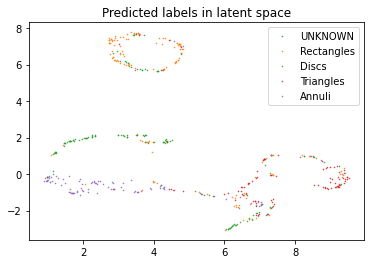

In this notebook we setup autoencoders, with the goal to explore the latent space it generates. In this case however, we will guide the formation of the latent space by including labels to specific images.

The autoencoders we use are based on randomly construct convolutional neural networks in which we can control the number of parameters it contains. This type of control can be beneficial when the amount of data on which one can train a network is not very voluminous, as it allows for better handles on overfitting.

The constructed latent space can be used for unsupervised and supervised exploration methods. In our limited experience, the classifiers that are trained come out of the data are reasonable, but can be improved upon using classic classification methods, as shown further.

[1]:

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

from pyMSDtorch.core import helpers

from pyMSDtorch.core import train_scripts

from pyMSDtorch.core.networks import SparseNet

from pyMSDtorch.test_data.twoD import random_shapes

from pyMSDtorch.core.utils import latent_space_viewer

from pyMSDtorch.viz_tools import plots

from pyMSDtorch.viz_tools import plot_autoencoder_image_classification as paic

import matplotlib.pyplot as plt

from torch.utils.data import DataLoader, TensorDataset

import einops

import umap

<frozen importlib._bootstrap>:219: RuntimeWarning: scipy._lib.messagestream.MessageStream size changed, may indicate binary incompatibility. Expected 56 from C header, got 64 from PyObject

Create some data first

[2]:

N_train = 500

N_labeled = 100

N_test = 500

noise_level = 0.150

Nxy = 32

train_data = random_shapes.build_random_shape_set_numpy(n_imgs=N_train,

noise_level=noise_level,

n_xy=Nxy)

test_data = random_shapes.build_random_shape_set_numpy(n_imgs=N_test,

noise_level=noise_level,

n_xy=Nxy)

[3]:

plots.plot_shapes_data_numpy(train_data)

[4]:

which_one = "Noisy" #"GroundTruth"

batch_size = 100

loader_params = {'batch_size': batch_size,

'shuffle': True}

train_imgs = torch.Tensor(train_data[which_one]).unsqueeze(1)

train_labels = torch.Tensor(train_data["Label"]).unsqueeze(1)-1

train_labels[N_labeled:]=-1 # remove some labels to highlight 'mixed' training

Ttrain_data = TensorDataset(train_imgs,train_labels)

train_loader = DataLoader(Ttrain_data, **loader_params)

loader_params = {'batch_size': batch_size,

'shuffle': False}

test_images = torch.Tensor(test_data[which_one]).unsqueeze(1)

test_labels = torch.Tensor(test_data["Label"]).unsqueeze(1)-1

Ttest_data = TensorDataset( test_images, test_labels )

test_loader = DataLoader(Ttest_data, **loader_params)

Lets build an autoencoder first.

There are a number of parameters to play with that impact the size of the network:

- latent_shape: the spatial footprint of the image in latent space.

I don't recommend going below 4x4, because it interferes with the

dilation choices. This is a bit of a annoyiong feature, we need to fix that.

Its on the list.

- out_channels: the number of channels of the latent image. Determines the

dimension of latent space: (channels,latent_shape[-2], latent_shape[-1])

- depth: the depth of the random sparse convolutional encoder / decoder

- hidden channels: The number of channels put out per convolution.

- max_degree / min_degree : This determines how many connections you have per node.

Other parameters do not impact the size of the network dramatically / at all:

- in_shape: determined by the input shape of the image.

- dilations: the maximum dilation should not exceed the smallest image dimension.

- alpha_range: determines the type of graphs (wide vs skinny). When alpha is large,

the chances for skinny graphs to be generated increases.

We don't know which parameter choice is best, so we randomize it's choice.

- gamma_range: no effect unless the maximum degree and min_degree are far apart.

We don't know which parameter choice is best, so we randomize it's choice.

- pIL,pLO,IO: keep as is.

- stride_base: make sure your latent image size can be generated from the in_shape

by repeated division of with this number.

For the classification, specify the number of output classes. Here we work with 4 shapes, so set it to 4. The dropout rate governs the dropout layers in the classifier part of the networks and doesn’t affect the autoencoder part.

[5]:

autoencoder = SparseNet.SparseAEC(in_shape=(32, 32),

latent_shape=(4, 4),

out_classes=4,

depth=40,

dilations=[1,2,3],

hidden_channels=3,

out_channels=2,

alpha_range=(0.5, 1.0),

gamma_range=(0.0, 0.5),

max_degree=10, min_degree=10,

pIL=0.15,

pLO=0.15,

IO=False,

stride_base=2,

dropout_rate=0.05,)

pytorch_total_params = helpers.count_parameters(autoencoder)

print( "Number of parameters:", pytorch_total_params)

Number of parameters: 372778

We define two optimizers, one for autoencoding and one for classification. They will be minimized consequetively instead of building a single sum of targets. This avoids choosing the right weight. The mini-epochs are the number of epochs it passes over the whole data set to optimize a single atrget function. The autoencoder is done first.

[6]:

torch.cuda.empty_cache()

learning_rate = 1e-3

num_epochs=50

criterion_AE = nn.MSELoss()

optimizer_AE = optim.Adam(autoencoder.parameters(), lr=learning_rate)

criterion_label = nn.CrossEntropyLoss(ignore_index=-1)

optimizer_label = optim.Adam(autoencoder.parameters(), lr=learning_rate)

rv = train_scripts.autoencode_and_classify_training(net=autoencoder.to('cuda:0'),

trainloader=train_loader,

validationloader=test_loader,

macro_epochs=num_epochs,

mini_epochs=5,

criteria_autoencode=criterion_AE,

minimizer_autoencode=optimizer_AE,

criteria_classify=criterion_label,

minimizer_classify=optimizer_label,

device="cuda:0",

show=1,

clip_value=100.0)

Epoch 1, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.5833e-01 | Validation Loss : 1.9714e-01

Training CC : 0.1946 | Validation CC : 0.4514

** Classification Losses **

Training Loss : 1.4542e+00 | Validation Loss : 1.4837e+00

Training F1 Macro: 0.1412 | Validation F1 Macro : 0.1729

Training F1 Micro: 0.2659 | Validation F1 Micro : 0.2720

Epoch 1, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.7494e-01 | Validation Loss : 1.3841e-01

Training CC : 0.5357 | Validation CC : 0.6173

** Classification Losses **

Training Loss : 1.4567e+00 | Validation Loss : 1.5082e+00

Training F1 Macro: 0.1078 | Validation F1 Macro : 0.1527

Training F1 Micro: 0.2518 | Validation F1 Micro : 0.2580

Epoch 1, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.2557e-01 | Validation Loss : 1.0217e-01

Training CC : 0.6537 | Validation CC : 0.6885

** Classification Losses **

Training Loss : 1.5433e+00 | Validation Loss : 1.5206e+00

Training F1 Macro: 0.1070 | Validation F1 Macro : 0.1454

Training F1 Micro: 0.2443 | Validation F1 Micro : 0.2580

Epoch 1, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.3322e-02 | Validation Loss : 7.6507e-02

Training CC : 0.7179 | Validation CC : 0.7457

** Classification Losses **

Training Loss : 1.5297e+00 | Validation Loss : 1.5298e+00

Training F1 Macro: 0.1070 | Validation F1 Macro : 0.1338

Training F1 Micro: 0.2436 | Validation F1 Micro : 0.2480

Epoch 1, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.9479e-02 | Validation Loss : 5.7264e-02

Training CC : 0.7750 | Validation CC : 0.8002

** Classification Losses **

Training Loss : 1.5637e+00 | Validation Loss : 1.5268e+00

Training F1 Macro: 0.0887 | Validation F1 Macro : 0.1491

Training F1 Micro: 0.2143 | Validation F1 Micro : 0.2560

Epoch 2, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.9107e-02 | Validation Loss : 6.3066e-02

Training CC : 0.8004 | Validation CC : 0.7721

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.4098e+00 | Validation Loss : 1.2859e+00

Training F1 Macro: 0.1368 | Validation F1 Macro : 0.2642

Training F1 Micro: 0.2651 | Validation F1 Micro : 0.3360

Epoch 2, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.6844e-02 | Validation Loss : 7.0438e-02

Training CC : 0.7638 | Validation CC : 0.7362

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.1472e+00 | Validation Loss : 1.1916e+00

Training F1 Macro: 0.4144 | Validation F1 Macro : 0.3684

Training F1 Micro: 0.4584 | Validation F1 Micro : 0.4100

Epoch 2, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.1455e-02 | Validation Loss : 7.2273e-02

Training CC : 0.7415 | Validation CC : 0.7272

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.0518e+00 | Validation Loss : 1.1435e+00

Training F1 Macro: 0.5795 | Validation F1 Macro : 0.4515

Training F1 Micro: 0.5803 | Validation F1 Micro : 0.4740

Epoch 2, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.3640e-02 | Validation Loss : 7.4967e-02

Training CC : 0.7305 | Validation CC : 0.7132

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.1016e-01 | Validation Loss : 1.0982e+00

Training F1 Macro: 0.6049 | Validation F1 Macro : 0.5148

Training F1 Micro: 0.6839 | Validation F1 Micro : 0.5260

Epoch 2, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.5467e-02 | Validation Loss : 7.6074e-02

Training CC : 0.7207 | Validation CC : 0.7064

** Classification Losses ** <---- Now Optimizing

Training Loss : 8.3661e-01 | Validation Loss : 1.0611e+00

Training F1 Macro: 0.7700 | Validation F1 Macro : 0.5408

Training F1 Micro: 0.7678 | Validation F1 Micro : 0.5460

Epoch 3, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.2492e-02 | Validation Loss : 4.8532e-02

Training CC : 0.7748 | Validation CC : 0.8198

** Classification Losses **

Training Loss : 8.0198e-01 | Validation Loss : 1.1293e+00

Training F1 Macro: 0.7082 | Validation F1 Macro : 0.4957

Training F1 Micro: 0.7186 | Validation F1 Micro : 0.5080

Epoch 3, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 4.3980e-02 | Validation Loss : 3.8473e-02

Training CC : 0.8411 | Validation CC : 0.8520

** Classification Losses **

Training Loss : 9.4166e-01 | Validation Loss : 1.1996e+00

Training F1 Macro: 0.5814 | Validation F1 Macro : 0.3834

Training F1 Micro: 0.6267 | Validation F1 Micro : 0.4200

Epoch 3, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 3.5708e-02 | Validation Loss : 3.3619e-02

Training CC : 0.8654 | Validation CC : 0.8676

** Classification Losses **

Training Loss : 1.0400e+00 | Validation Loss : 1.2227e+00

Training F1 Macro: 0.4950 | Validation F1 Macro : 0.3565

Training F1 Micro: 0.5396 | Validation F1 Micro : 0.4020

Epoch 3, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 3.1166e-02 | Validation Loss : 3.1066e-02

Training CC : 0.8803 | Validation CC : 0.8788

** Classification Losses **

Training Loss : 1.1217e+00 | Validation Loss : 1.2409e+00

Training F1 Macro: 0.4504 | Validation F1 Macro : 0.3344

Training F1 Micro: 0.4988 | Validation F1 Micro : 0.3880

Epoch 3, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.8666e-02 | Validation Loss : 2.9292e-02

Training CC : 0.8904 | Validation CC : 0.8869

** Classification Losses **

Training Loss : 1.0885e+00 | Validation Loss : 1.2616e+00

Training F1 Macro: 0.4854 | Validation F1 Macro : 0.3249

Training F1 Micro: 0.5238 | Validation F1 Micro : 0.3840

Epoch 4, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.7689e-02 | Validation Loss : 3.1067e-02

Training CC : 0.8944 | Validation CC : 0.8795

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.1196e+00 | Validation Loss : 1.1489e+00

Training F1 Macro: 0.4359 | Validation F1 Macro : 0.4688

Training F1 Micro: 0.5154 | Validation F1 Micro : 0.4900

Epoch 4, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 3.1510e-02 | Validation Loss : 3.6835e-02

Training CC : 0.8790 | Validation CC : 0.8557

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.0130e-01 | Validation Loss : 1.0439e+00

Training F1 Macro: 0.7237 | Validation F1 Macro : 0.5512

Training F1 Micro: 0.7267 | Validation F1 Micro : 0.5600

Epoch 4, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 3.6501e-02 | Validation Loss : 3.8769e-02

Training CC : 0.8585 | Validation CC : 0.8474

** Classification Losses ** <---- Now Optimizing

Training Loss : 7.4278e-01 | Validation Loss : 1.0212e+00

Training F1 Macro: 0.8133 | Validation F1 Macro : 0.5642

Training F1 Micro: 0.8138 | Validation F1 Micro : 0.5640

Epoch 4, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 3.7923e-02 | Validation Loss : 3.9381e-02

Training CC : 0.8527 | Validation CC : 0.8445

** Classification Losses ** <---- Now Optimizing

Training Loss : 6.4941e-01 | Validation Loss : 9.9847e-01

Training F1 Macro: 0.8295 | Validation F1 Macro : 0.5819

Training F1 Micro: 0.8402 | Validation F1 Micro : 0.5800

Epoch 4, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 3.9013e-02 | Validation Loss : 4.0582e-02

Training CC : 0.8479 | Validation CC : 0.8393

** Classification Losses ** <---- Now Optimizing

Training Loss : 6.9050e-01 | Validation Loss : 9.9734e-01

Training F1 Macro: 0.7857 | Validation F1 Macro : 0.5757

Training F1 Micro: 0.7938 | Validation F1 Micro : 0.5860

Epoch 5, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 3.3743e-02 | Validation Loss : 3.1420e-02

Training CC : 0.8697 | Validation CC : 0.8789

** Classification Losses **

Training Loss : 6.3096e-01 | Validation Loss : 1.0156e+00

Training F1 Macro: 0.8209 | Validation F1 Macro : 0.5716

Training F1 Micro: 0.8429 | Validation F1 Micro : 0.5840

Epoch 5, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.8137e-02 | Validation Loss : 2.8415e-02

Training CC : 0.8927 | Validation CC : 0.8895

** Classification Losses **

Training Loss : 6.2693e-01 | Validation Loss : 1.0242e+00

Training F1 Macro: 0.8346 | Validation F1 Macro : 0.5789

Training F1 Micro: 0.8430 | Validation F1 Micro : 0.5900

Epoch 5, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.5760e-02 | Validation Loss : 2.6215e-02

Training CC : 0.9017 | Validation CC : 0.8982

** Classification Losses **

Training Loss : 7.1689e-01 | Validation Loss : 1.0248e+00

Training F1 Macro: 0.8085 | Validation F1 Macro : 0.5630

Training F1 Micro: 0.8148 | Validation F1 Micro : 0.5760

Epoch 5, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.3933e-02 | Validation Loss : 2.4485e-02

Training CC : 0.9091 | Validation CC : 0.9046

** Classification Losses **

Training Loss : 7.3269e-01 | Validation Loss : 1.0355e+00

Training F1 Macro: 0.8173 | Validation F1 Macro : 0.5564

Training F1 Micro: 0.8179 | Validation F1 Micro : 0.5680

Epoch 5, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.2497e-02 | Validation Loss : 2.3630e-02

Training CC : 0.9148 | Validation CC : 0.9083

** Classification Losses **

Training Loss : 7.4537e-01 | Validation Loss : 1.0341e+00

Training F1 Macro: 0.8470 | Validation F1 Macro : 0.5483

Training F1 Micro: 0.8414 | Validation F1 Micro : 0.5560

Epoch 6, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.1940e-02 | Validation Loss : 2.4573e-02

Training CC : 0.9168 | Validation CC : 0.9045

** Classification Losses ** <---- Now Optimizing

Training Loss : 7.0182e-01 | Validation Loss : 9.8734e-01

Training F1 Macro: 0.8343 | Validation F1 Macro : 0.5737

Training F1 Micro: 0.8300 | Validation F1 Micro : 0.5840

Epoch 6, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.3738e-02 | Validation Loss : 2.6494e-02

Training CC : 0.9096 | Validation CC : 0.8966

** Classification Losses ** <---- Now Optimizing

Training Loss : 5.7709e-01 | Validation Loss : 9.7654e-01

Training F1 Macro: 0.8604 | Validation F1 Macro : 0.5669

Training F1 Micro: 0.8608 | Validation F1 Micro : 0.5740

Epoch 6, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.5391e-02 | Validation Loss : 2.7516e-02

Training CC : 0.9030 | Validation CC : 0.8923

** Classification Losses ** <---- Now Optimizing

Training Loss : 5.5142e-01 | Validation Loss : 9.4373e-01

Training F1 Macro: 0.7920 | Validation F1 Macro : 0.6263

Training F1 Micro: 0.8294 | Validation F1 Micro : 0.6320

Epoch 6, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.6273e-02 | Validation Loss : 2.8650e-02

Training CC : 0.8990 | Validation CC : 0.8876

** Classification Losses ** <---- Now Optimizing

Training Loss : 5.3247e-01 | Validation Loss : 9.5497e-01

Training F1 Macro: 0.8407 | Validation F1 Macro : 0.6279

Training F1 Micro: 0.8674 | Validation F1 Micro : 0.6220

Epoch 6, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.7523e-02 | Validation Loss : 2.9696e-02

Training CC : 0.8941 | Validation CC : 0.8833

** Classification Losses ** <---- Now Optimizing

Training Loss : 4.0847e-01 | Validation Loss : 9.5333e-01

Training F1 Macro: 0.9402 | Validation F1 Macro : 0.6296

Training F1 Micro: 0.9391 | Validation F1 Micro : 0.6200

Epoch 7, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.5244e-02 | Validation Loss : 2.4720e-02

Training CC : 0.9038 | Validation CC : 0.9037

** Classification Losses **

Training Loss : 3.8517e-01 | Validation Loss : 9.4221e-01

Training F1 Macro: 0.9235 | Validation F1 Macro : 0.6441

Training F1 Micro: 0.9294 | Validation F1 Micro : 0.6440

Epoch 7, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.2288e-02 | Validation Loss : 2.2985e-02

Training CC : 0.9157 | Validation CC : 0.9109

** Classification Losses **

Training Loss : 4.7860e-01 | Validation Loss : 9.3218e-01

Training F1 Macro: 0.8608 | Validation F1 Macro : 0.6367

Training F1 Micro: 0.9125 | Validation F1 Micro : 0.6400

Epoch 7, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.0640e-02 | Validation Loss : 2.2053e-02

Training CC : 0.9219 | Validation CC : 0.9149

** Classification Losses **

Training Loss : 4.6922e-01 | Validation Loss : 9.5766e-01

Training F1 Macro: 0.9025 | Validation F1 Macro : 0.5948

Training F1 Micro: 0.9113 | Validation F1 Micro : 0.6040

Epoch 7, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.9462e-02 | Validation Loss : 2.0969e-02

Training CC : 0.9267 | Validation CC : 0.9191

** Classification Losses **

Training Loss : 4.8103e-01 | Validation Loss : 9.5177e-01

Training F1 Macro: 0.8614 | Validation F1 Macro : 0.6067

Training F1 Micro: 0.8945 | Validation F1 Micro : 0.6160

Epoch 7, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.8389e-02 | Validation Loss : 2.0196e-02

Training CC : 0.9309 | Validation CC : 0.9225

** Classification Losses **

Training Loss : 5.3990e-01 | Validation Loss : 9.8757e-01

Training F1 Macro: 0.8741 | Validation F1 Macro : 0.6020

Training F1 Micro: 0.8875 | Validation F1 Micro : 0.6060

Epoch 8, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.7780e-02 | Validation Loss : 2.0791e-02

Training CC : 0.9331 | Validation CC : 0.9201

** Classification Losses ** <---- Now Optimizing

Training Loss : 4.8074e-01 | Validation Loss : 9.3555e-01

Training F1 Macro: 0.8894 | Validation F1 Macro : 0.6286

Training F1 Micro: 0.8711 | Validation F1 Micro : 0.6280

Epoch 8, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.8955e-02 | Validation Loss : 2.2192e-02

Training CC : 0.9284 | Validation CC : 0.9145

** Classification Losses ** <---- Now Optimizing

Training Loss : 4.1271e-01 | Validation Loss : 9.3977e-01

Training F1 Macro: 0.8991 | Validation F1 Macro : 0.6416

Training F1 Micro: 0.9057 | Validation F1 Micro : 0.6340

Epoch 8, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.0248e-02 | Validation Loss : 2.2897e-02

Training CC : 0.9233 | Validation CC : 0.9116

** Classification Losses ** <---- Now Optimizing

Training Loss : 3.6204e-01 | Validation Loss : 9.2149e-01

Training F1 Macro: 0.9219 | Validation F1 Macro : 0.6617

Training F1 Micro: 0.9205 | Validation F1 Micro : 0.6540

Epoch 8, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.1133e-02 | Validation Loss : 2.3551e-02

Training CC : 0.9201 | Validation CC : 0.9089

** Classification Losses ** <---- Now Optimizing

Training Loss : 3.3710e-01 | Validation Loss : 9.3359e-01

Training F1 Macro: 0.9039 | Validation F1 Macro : 0.6330

Training F1 Micro: 0.9092 | Validation F1 Micro : 0.6280

Epoch 8, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 2.2090e-02 | Validation Loss : 2.4345e-02

Training CC : 0.9166 | Validation CC : 0.9057

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.3895e-01 | Validation Loss : 9.5366e-01

Training F1 Macro: 0.9673 | Validation F1 Macro : 0.6222

Training F1 Micro: 0.9599 | Validation F1 Micro : 0.6180

Epoch 9, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 2.0796e-02 | Validation Loss : 2.1356e-02

Training CC : 0.9217 | Validation CC : 0.9177

** Classification Losses **

Training Loss : 2.6027e-01 | Validation Loss : 9.2510e-01

Training F1 Macro: 0.9396 | Validation F1 Macro : 0.6262

Training F1 Micro: 0.9318 | Validation F1 Micro : 0.6260

Epoch 9, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.8047e-02 | Validation Loss : 2.0061e-02

Training CC : 0.9319 | Validation CC : 0.9228

** Classification Losses **

Training Loss : 2.2495e-01 | Validation Loss : 9.0182e-01

Training F1 Macro: 0.9634 | Validation F1 Macro : 0.6524

Training F1 Micro: 0.9699 | Validation F1 Micro : 0.6520

Epoch 9, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.6831e-02 | Validation Loss : 1.9079e-02

Training CC : 0.9368 | Validation CC : 0.9269

** Classification Losses **

Training Loss : 2.6530e-01 | Validation Loss : 8.9400e-01

Training F1 Macro: 0.9469 | Validation F1 Macro : 0.6384

Training F1 Micro: 0.9423 | Validation F1 Micro : 0.6420

Epoch 9, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.5871e-02 | Validation Loss : 1.8142e-02

Training CC : 0.9404 | Validation CC : 0.9305

** Classification Losses **

Training Loss : 2.7760e-01 | Validation Loss : 9.0853e-01

Training F1 Macro: 0.9513 | Validation F1 Macro : 0.6179

Training F1 Micro: 0.9489 | Validation F1 Micro : 0.6200

Epoch 9, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.5164e-02 | Validation Loss : 1.7539e-02

Training CC : 0.9436 | Validation CC : 0.9331

** Classification Losses **

Training Loss : 3.1673e-01 | Validation Loss : 9.1956e-01

Training F1 Macro: 0.9232 | Validation F1 Macro : 0.6268

Training F1 Micro: 0.9280 | Validation F1 Micro : 0.6280

Epoch 10, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.4578e-02 | Validation Loss : 1.7977e-02

Training CC : 0.9456 | Validation CC : 0.9313

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.6310e-01 | Validation Loss : 9.4249e-01

Training F1 Macro: 0.9633 | Validation F1 Macro : 0.6326

Training F1 Micro: 0.9600 | Validation F1 Micro : 0.6280

Epoch 10, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.5383e-02 | Validation Loss : 1.8928e-02

Training CC : 0.9423 | Validation CC : 0.9275

** Classification Losses ** <---- Now Optimizing

Training Loss : 3.1046e-01 | Validation Loss : 9.0960e-01

Training F1 Macro: 0.8953 | Validation F1 Macro : 0.6527

Training F1 Micro: 0.9013 | Validation F1 Micro : 0.6480

Epoch 10, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.6955e-02 | Validation Loss : 1.9794e-02

Training CC : 0.9373 | Validation CC : 0.9240

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8785e-01 | Validation Loss : 9.0399e-01

Training F1 Macro: 0.9519 | Validation F1 Macro : 0.6345

Training F1 Micro: 0.9552 | Validation F1 Micro : 0.6380

Epoch 10, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.7438e-02 | Validation Loss : 2.0332e-02

Training CC : 0.9346 | Validation CC : 0.9218

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.9113e-01 | Validation Loss : 9.2813e-01

Training F1 Macro: 0.9222 | Validation F1 Macro : 0.6069

Training F1 Micro: 0.9236 | Validation F1 Micro : 0.6060

Epoch 10, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.7993e-02 | Validation Loss : 2.0730e-02

Training CC : 0.9326 | Validation CC : 0.9202

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8799e-01 | Validation Loss : 9.0754e-01

Training F1 Macro: 0.9206 | Validation F1 Macro : 0.6161

Training F1 Micro: 0.9205 | Validation F1 Micro : 0.6220

Epoch 11, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.7403e-02 | Validation Loss : 1.8835e-02

Training CC : 0.9364 | Validation CC : 0.9279

** Classification Losses **

Training Loss : 2.6457e-01 | Validation Loss : 8.8831e-01

Training F1 Macro: 0.8917 | Validation F1 Macro : 0.6314

Training F1 Micro: 0.8893 | Validation F1 Micro : 0.6320

Epoch 11, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.5003e-02 | Validation Loss : 1.7768e-02

Training CC : 0.9438 | Validation CC : 0.9321

** Classification Losses **

Training Loss : 1.8082e-01 | Validation Loss : 8.8316e-01

Training F1 Macro: 0.9293 | Validation F1 Macro : 0.6331

Training F1 Micro: 0.9375 | Validation F1 Micro : 0.6380

Epoch 11, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.4159e-02 | Validation Loss : 1.6959e-02

Training CC : 0.9474 | Validation CC : 0.9354

** Classification Losses **

Training Loss : 2.0158e-01 | Validation Loss : 8.6954e-01

Training F1 Macro: 0.9375 | Validation F1 Macro : 0.6380

Training F1 Micro: 0.9474 | Validation F1 Micro : 0.6460

Epoch 11, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.3952e-02 | Validation Loss : 1.6245e-02

Training CC : 0.9492 | Validation CC : 0.9380

** Classification Losses **

Training Loss : 2.5369e-01 | Validation Loss : 8.8022e-01

Training F1 Macro: 0.8668 | Validation F1 Macro : 0.6351

Training F1 Micro: 0.8916 | Validation F1 Micro : 0.6380

Epoch 11, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.2904e-02 | Validation Loss : 1.5950e-02

Training CC : 0.9524 | Validation CC : 0.9396

** Classification Losses **

Training Loss : 1.8340e-01 | Validation Loss : 8.6461e-01

Training F1 Macro: 0.9492 | Validation F1 Macro : 0.6339

Training F1 Micro: 0.9501 | Validation F1 Micro : 0.6380

Epoch 12, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.2211e-02 | Validation Loss : 1.6111e-02

Training CC : 0.9546 | Validation CC : 0.9390

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.2418e-01 | Validation Loss : 8.9275e-01

Training F1 Macro: 0.8881 | Validation F1 Macro : 0.6358

Training F1 Micro: 0.9305 | Validation F1 Micro : 0.6340

Epoch 12, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.2899e-02 | Validation Loss : 1.6743e-02

Training CC : 0.9522 | Validation CC : 0.9365

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.0333e-01 | Validation Loss : 9.3081e-01

Training F1 Macro: 0.9174 | Validation F1 Macro : 0.6288

Training F1 Micro: 0.9166 | Validation F1 Micro : 0.6240

Epoch 12, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.3326e-02 | Validation Loss : 1.7252e-02

Training CC : 0.9503 | Validation CC : 0.9344

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8194e-01 | Validation Loss : 8.7050e-01

Training F1 Macro: 0.9065 | Validation F1 Macro : 0.6398

Training F1 Micro: 0.9100 | Validation F1 Micro : 0.6440

Epoch 12, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.4215e-02 | Validation Loss : 1.8197e-02

Training CC : 0.9469 | Validation CC : 0.9307

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.9415e-01 | Validation Loss : 8.7652e-01

Training F1 Macro: 0.9131 | Validation F1 Macro : 0.6182

Training F1 Micro: 0.9005 | Validation F1 Micro : 0.6220

Epoch 12, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.5127e-02 | Validation Loss : 1.8726e-02

Training CC : 0.9434 | Validation CC : 0.9287

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.0680e-01 | Validation Loss : 8.7771e-01

Training F1 Macro: 0.8930 | Validation F1 Macro : 0.6297

Training F1 Micro: 0.9006 | Validation F1 Micro : 0.6300

Epoch 13, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.4305e-02 | Validation Loss : 1.6714e-02

Training CC : 0.9470 | Validation CC : 0.9365

** Classification Losses **

Training Loss : 2.1443e-01 | Validation Loss : 8.6231e-01

Training F1 Macro: 0.8839 | Validation F1 Macro : 0.6399

Training F1 Micro: 0.8928 | Validation F1 Micro : 0.6440

Epoch 13, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.3025e-02 | Validation Loss : 1.5881e-02

Training CC : 0.9522 | Validation CC : 0.9397

** Classification Losses **

Training Loss : 1.7527e-01 | Validation Loss : 8.4568e-01

Training F1 Macro: 0.9115 | Validation F1 Macro : 0.6464

Training F1 Micro: 0.9205 | Validation F1 Micro : 0.6500

Epoch 13, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.2185e-02 | Validation Loss : 1.5217e-02

Training CC : 0.9553 | Validation CC : 0.9422

** Classification Losses **

Training Loss : 1.6836e-01 | Validation Loss : 8.3084e-01

Training F1 Macro: 0.9136 | Validation F1 Macro : 0.6637

Training F1 Micro: 0.9199 | Validation F1 Micro : 0.6680

Epoch 13, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.1708e-02 | Validation Loss : 1.4811e-02

Training CC : 0.9574 | Validation CC : 0.9439

** Classification Losses **

Training Loss : 1.5179e-01 | Validation Loss : 8.5556e-01

Training F1 Macro: 0.9303 | Validation F1 Macro : 0.6488

Training F1 Micro: 0.9267 | Validation F1 Micro : 0.6520

Epoch 13, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.0926e-02 | Validation Loss : 1.4488e-02

Training CC : 0.9596 | Validation CC : 0.9454

** Classification Losses **

Training Loss : 1.6101e-01 | Validation Loss : 8.4275e-01

Training F1 Macro: 0.9315 | Validation F1 Macro : 0.6548

Training F1 Micro: 0.9523 | Validation F1 Micro : 0.6600

Epoch 14, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.0402e-02 | Validation Loss : 1.4740e-02

Training CC : 0.9614 | Validation CC : 0.9444

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.7058e-01 | Validation Loss : 8.3775e-01

Training F1 Macro: 0.9381 | Validation F1 Macro : 0.6698

Training F1 Micro: 0.9402 | Validation F1 Micro : 0.6740

Epoch 14, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.1162e-02 | Validation Loss : 1.5246e-02

Training CC : 0.9591 | Validation CC : 0.9424

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.9570e-01 | Validation Loss : 8.7155e-01

Training F1 Macro: 0.8464 | Validation F1 Macro : 0.6625

Training F1 Micro: 0.9012 | Validation F1 Micro : 0.6660

Epoch 14, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.1356e-02 | Validation Loss : 1.5513e-02

Training CC : 0.9579 | Validation CC : 0.9413

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8357e-01 | Validation Loss : 8.6651e-01

Training F1 Macro: 0.9284 | Validation F1 Macro : 0.6500

Training F1 Micro: 0.9274 | Validation F1 Micro : 0.6540

Epoch 14, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.1680e-02 | Validation Loss : 1.5805e-02

Training CC : 0.9568 | Validation CC : 0.9401

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.9267e-01 | Validation Loss : 8.7959e-01

Training F1 Macro: 0.9022 | Validation F1 Macro : 0.6232

Training F1 Micro: 0.9061 | Validation F1 Micro : 0.6280

Epoch 14, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 1.1952e-02 | Validation Loss : 1.6067e-02

Training CC : 0.9556 | Validation CC : 0.9391

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.1783e-01 | Validation Loss : 8.5367e-01

Training F1 Macro: 0.9383 | Validation F1 Macro : 0.6733

Training F1 Micro: 0.9424 | Validation F1 Micro : 0.6780

Epoch 15, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.1371e-02 | Validation Loss : 1.5021e-02

Training CC : 0.9578 | Validation CC : 0.9432

** Classification Losses **

Training Loss : 2.6352e-01 | Validation Loss : 8.3245e-01

Training F1 Macro: 0.8697 | Validation F1 Macro : 0.6602

Training F1 Micro: 0.8753 | Validation F1 Micro : 0.6640

Epoch 15, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.0914e-02 | Validation Loss : 1.4526e-02

Training CC : 0.9600 | Validation CC : 0.9452

** Classification Losses **

Training Loss : 1.6287e-01 | Validation Loss : 8.3996e-01

Training F1 Macro: 0.9177 | Validation F1 Macro : 0.6691

Training F1 Micro: 0.9348 | Validation F1 Micro : 0.6720

Epoch 15, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 1.0366e-02 | Validation Loss : 1.4301e-02

Training CC : 0.9622 | Validation CC : 0.9460

** Classification Losses **

Training Loss : 1.4815e-01 | Validation Loss : 8.3877e-01

Training F1 Macro: 0.9482 | Validation F1 Macro : 0.6721

Training F1 Micro: 0.9478 | Validation F1 Micro : 0.6740

Epoch 15, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.8331e-03 | Validation Loss : 1.3929e-02

Training CC : 0.9638 | Validation CC : 0.9474

** Classification Losses **

Training Loss : 9.2873e-02 | Validation Loss : 8.5900e-01

Training F1 Macro: 0.9573 | Validation F1 Macro : 0.6487

Training F1 Micro: 0.9590 | Validation F1 Micro : 0.6540

Epoch 15, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.3855e-03 | Validation Loss : 1.3776e-02

Training CC : 0.9654 | Validation CC : 0.9482

** Classification Losses **

Training Loss : 1.3484e-01 | Validation Loss : 8.4049e-01

Training F1 Macro: 0.9553 | Validation F1 Macro : 0.6748

Training F1 Micro: 0.9591 | Validation F1 Micro : 0.6760

Epoch 16, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.3653e-03 | Validation Loss : 1.3777e-02

Training CC : 0.9657 | Validation CC : 0.9482

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3203e-01 | Validation Loss : 8.4416e-01

Training F1 Macro: 0.9486 | Validation F1 Macro : 0.6665

Training F1 Micro: 0.9518 | Validation F1 Micro : 0.6680

Epoch 16, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.3063e-03 | Validation Loss : 1.3871e-02

Training CC : 0.9657 | Validation CC : 0.9479

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8428e-01 | Validation Loss : 8.5315e-01

Training F1 Macro: 0.9073 | Validation F1 Macro : 0.6563

Training F1 Micro: 0.9105 | Validation F1 Micro : 0.6580

Epoch 16, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.3482e-03 | Validation Loss : 1.4009e-02

Training CC : 0.9654 | Validation CC : 0.9473

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.9010e-02 | Validation Loss : 8.7678e-01

Training F1 Macro: 0.9536 | Validation F1 Macro : 0.6413

Training F1 Micro: 0.9542 | Validation F1 Micro : 0.6440

Epoch 16, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.6327e-03 | Validation Loss : 1.4144e-02

Training CC : 0.9645 | Validation CC : 0.9468

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.2682e-01 | Validation Loss : 9.0067e-01

Training F1 Macro: 0.9485 | Validation F1 Macro : 0.6325

Training F1 Micro: 0.9587 | Validation F1 Micro : 0.6340

Epoch 16, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.7647e-03 | Validation Loss : 1.4251e-02

Training CC : 0.9640 | Validation CC : 0.9463

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.0845e-01 | Validation Loss : 8.6177e-01

Training F1 Macro: 0.9578 | Validation F1 Macro : 0.6502

Training F1 Micro: 0.9454 | Validation F1 Micro : 0.6540

Epoch 17, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.4497e-03 | Validation Loss : 1.3772e-02

Training CC : 0.9650 | Validation CC : 0.9481

** Classification Losses **

Training Loss : 1.6202e-01 | Validation Loss : 8.5356e-01

Training F1 Macro: 0.8868 | Validation F1 Macro : 0.6650

Training F1 Micro: 0.8992 | Validation F1 Micro : 0.6680

Epoch 17, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.2654e-03 | Validation Loss : 1.3456e-02

Training CC : 0.9662 | Validation CC : 0.9493

** Classification Losses **

Training Loss : 2.0396e-01 | Validation Loss : 8.3408e-01

Training F1 Macro: 0.9091 | Validation F1 Macro : 0.6467

Training F1 Micro: 0.9120 | Validation F1 Micro : 0.6500

Epoch 17, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 8.9745e-03 | Validation Loss : 1.3355e-02

Training CC : 0.9672 | Validation CC : 0.9497

** Classification Losses **

Training Loss : 1.3781e-01 | Validation Loss : 8.6047e-01

Training F1 Macro: 0.9214 | Validation F1 Macro : 0.6386

Training F1 Micro: 0.9383 | Validation F1 Micro : 0.6440

Epoch 17, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 8.8195e-03 | Validation Loss : 1.3154e-02

Training CC : 0.9679 | Validation CC : 0.9506

** Classification Losses **

Training Loss : 1.9023e-01 | Validation Loss : 8.4928e-01

Training F1 Macro: 0.8888 | Validation F1 Macro : 0.6667

Training F1 Micro: 0.9071 | Validation F1 Micro : 0.6680

Epoch 17, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 8.6157e-03 | Validation Loss : 1.3201e-02

Training CC : 0.9687 | Validation CC : 0.9505

** Classification Losses **

Training Loss : 1.4719e-01 | Validation Loss : 8.4225e-01

Training F1 Macro: 0.9113 | Validation F1 Macro : 0.6757

Training F1 Micro: 0.9200 | Validation F1 Micro : 0.6780

Epoch 18, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 8.3774e-03 | Validation Loss : 1.3181e-02

Training CC : 0.9693 | Validation CC : 0.9505

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5271e-01 | Validation Loss : 8.3087e-01

Training F1 Macro: 0.9156 | Validation F1 Macro : 0.6696

Training F1 Micro: 0.9216 | Validation F1 Micro : 0.6720

Epoch 18, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 8.4135e-03 | Validation Loss : 1.3251e-02

Training CC : 0.9692 | Validation CC : 0.9503

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.7403e-01 | Validation Loss : 8.7227e-01

Training F1 Macro: 0.9213 | Validation F1 Macro : 0.6358

Training F1 Micro: 0.9188 | Validation F1 Micro : 0.6420

Epoch 18, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 8.5600e-03 | Validation Loss : 1.3404e-02

Training CC : 0.9687 | Validation CC : 0.9496

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.7112e-01 | Validation Loss : 8.8280e-01

Training F1 Macro: 0.9297 | Validation F1 Macro : 0.6452

Training F1 Micro: 0.9209 | Validation F1 Micro : 0.6460

Epoch 18, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 9.1894e-03 | Validation Loss : 1.3514e-02

Training CC : 0.9672 | Validation CC : 0.9492

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.0314e-01 | Validation Loss : 8.9198e-01

Training F1 Macro: 0.9431 | Validation F1 Macro : 0.6361

Training F1 Micro: 0.9462 | Validation F1 Micro : 0.6360

Epoch 18, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 8.7022e-03 | Validation Loss : 1.3546e-02

Training CC : 0.9680 | Validation CC : 0.9491

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5245e-01 | Validation Loss : 8.7273e-01

Training F1 Macro: 0.9139 | Validation F1 Macro : 0.6438

Training F1 Micro: 0.9120 | Validation F1 Micro : 0.6460

Epoch 19, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.6351e-03 | Validation Loss : 1.3262e-02

Training CC : 0.9662 | Validation CC : 0.9502

** Classification Losses **

Training Loss : 1.4997e-01 | Validation Loss : 8.7745e-01

Training F1 Macro: 0.8983 | Validation F1 Macro : 0.6264

Training F1 Micro: 0.9022 | Validation F1 Micro : 0.6260

Epoch 19, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 8.8020e-03 | Validation Loss : 1.2972e-02

Training CC : 0.9688 | Validation CC : 0.9513

** Classification Losses **

Training Loss : 1.2232e-01 | Validation Loss : 8.5785e-01

Training F1 Macro: 0.9535 | Validation F1 Macro : 0.6637

Training F1 Micro: 0.9592 | Validation F1 Micro : 0.6680

Epoch 19, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 9.5769e-03 | Validation Loss : 1.2744e-02

Training CC : 0.9670 | Validation CC : 0.9524

** Classification Losses **

Training Loss : 1.3234e-01 | Validation Loss : 8.5989e-01

Training F1 Macro: 0.9310 | Validation F1 Macro : 0.6593

Training F1 Micro: 0.9431 | Validation F1 Micro : 0.6600

Epoch 19, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.9503e-03 | Validation Loss : 1.2637e-02

Training CC : 0.9710 | Validation CC : 0.9524

** Classification Losses **

Training Loss : 1.4379e-01 | Validation Loss : 8.5471e-01

Training F1 Macro: 0.9125 | Validation F1 Macro : 0.6611

Training F1 Micro: 0.9323 | Validation F1 Micro : 0.6620

Epoch 19, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.7567e-03 | Validation Loss : 1.2581e-02

Training CC : 0.9718 | Validation CC : 0.9532

** Classification Losses **

Training Loss : 1.3960e-01 | Validation Loss : 8.4192e-01

Training F1 Macro: 0.9126 | Validation F1 Macro : 0.6726

Training F1 Micro: 0.9318 | Validation F1 Micro : 0.6800

Epoch 20, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.4657e-03 | Validation Loss : 1.2658e-02

Training CC : 0.9726 | Validation CC : 0.9530

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5172e-01 | Validation Loss : 8.6882e-01

Training F1 Macro: 0.9454 | Validation F1 Macro : 0.6556

Training F1 Micro: 0.9467 | Validation F1 Micro : 0.6560

Epoch 20, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.8149e-03 | Validation Loss : 1.2747e-02

Training CC : 0.9718 | Validation CC : 0.9526

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.2112e-01 | Validation Loss : 8.6369e-01

Training F1 Macro: 0.9362 | Validation F1 Macro : 0.6560

Training F1 Micro: 0.9397 | Validation F1 Micro : 0.6580

Epoch 20, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.7463e-03 | Validation Loss : 1.2798e-02

Training CC : 0.9718 | Validation CC : 0.9524

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5293e-01 | Validation Loss : 8.5455e-01

Training F1 Macro: 0.9039 | Validation F1 Macro : 0.6618

Training F1 Micro: 0.9158 | Validation F1 Micro : 0.6640

Epoch 20, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.7043e-03 | Validation Loss : 1.2875e-02

Training CC : 0.9717 | Validation CC : 0.9521

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.6206e-01 | Validation Loss : 8.4736e-01

Training F1 Macro: 0.9281 | Validation F1 Macro : 0.6759

Training F1 Micro: 0.9342 | Validation F1 Micro : 0.6760

Epoch 20, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 8.2563e-03 | Validation Loss : 1.2997e-02

Training CC : 0.9705 | Validation CC : 0.9516

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.7141e-01 | Validation Loss : 8.5383e-01

Training F1 Macro: 0.9108 | Validation F1 Macro : 0.6694

Training F1 Micro: 0.9116 | Validation F1 Micro : 0.6700

Epoch 21, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.7102e-03 | Validation Loss : 1.2534e-02

Training CC : 0.9718 | Validation CC : 0.9529

** Classification Losses **

Training Loss : 1.6567e-01 | Validation Loss : 8.6571e-01

Training F1 Macro: 0.8981 | Validation F1 Macro : 0.6701

Training F1 Micro: 0.9118 | Validation F1 Micro : 0.6700

Epoch 21, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.2278e-03 | Validation Loss : 1.2528e-02

Training CC : 0.9734 | Validation CC : 0.9532

** Classification Losses **

Training Loss : 9.3937e-02 | Validation Loss : 8.4098e-01

Training F1 Macro: 0.9457 | Validation F1 Macro : 0.6727

Training F1 Micro: 0.9493 | Validation F1 Micro : 0.6760

Epoch 21, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.1630e-03 | Validation Loss : 1.2346e-02

Training CC : 0.9739 | Validation CC : 0.9541

** Classification Losses **

Training Loss : 1.0077e-01 | Validation Loss : 8.4972e-01

Training F1 Macro: 0.8803 | Validation F1 Macro : 0.6569

Training F1 Micro: 0.9387 | Validation F1 Micro : 0.6620

Epoch 21, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.8948e-03 | Validation Loss : 1.2502e-02

Training CC : 0.9726 | Validation CC : 0.9537

** Classification Losses **

Training Loss : 1.9061e-01 | Validation Loss : 8.4001e-01

Training F1 Macro: 0.9014 | Validation F1 Macro : 0.6726

Training F1 Micro: 0.9081 | Validation F1 Micro : 0.6760

Epoch 21, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.2557e-03 | Validation Loss : 1.2409e-02

Training CC : 0.9739 | Validation CC : 0.9534

** Classification Losses **

Training Loss : 1.6901e-01 | Validation Loss : 8.6209e-01

Training F1 Macro: 0.9469 | Validation F1 Macro : 0.6626

Training F1 Micro: 0.9406 | Validation F1 Micro : 0.6640

Epoch 22, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.2670e-03 | Validation Loss : 1.2509e-02

Training CC : 0.9736 | Validation CC : 0.9530

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.3909e-01 | Validation Loss : 8.3192e-01

Training F1 Macro: 0.8593 | Validation F1 Macro : 0.6702

Training F1 Micro: 0.8630 | Validation F1 Micro : 0.6720

Epoch 22, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.9881e-03 | Validation Loss : 1.2611e-02

Training CC : 0.9720 | Validation CC : 0.9526

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.9960e-02 | Validation Loss : 8.4697e-01

Training F1 Macro: 0.9484 | Validation F1 Macro : 0.6510

Training F1 Micro: 0.9462 | Validation F1 Micro : 0.6560

Epoch 22, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.2603e-03 | Validation Loss : 1.2619e-02

Training CC : 0.9734 | Validation CC : 0.9526

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.0235e-01 | Validation Loss : 8.7750e-01

Training F1 Macro: 0.9672 | Validation F1 Macro : 0.6185

Training F1 Micro: 0.9737 | Validation F1 Micro : 0.6160

Epoch 22, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.4607e-03 | Validation Loss : 1.2630e-02

Training CC : 0.9730 | Validation CC : 0.9525

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3184e-01 | Validation Loss : 8.5133e-01

Training F1 Macro: 0.9854 | Validation F1 Macro : 0.6330

Training F1 Micro: 0.9831 | Validation F1 Micro : 0.6360

Epoch 22, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.2900e-03 | Validation Loss : 1.2654e-02

Training CC : 0.9733 | Validation CC : 0.9524

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.0637e-02 | Validation Loss : 8.5801e-01

Training F1 Macro: 0.9771 | Validation F1 Macro : 0.6579

Training F1 Micro: 0.9715 | Validation F1 Micro : 0.6640

Epoch 23, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.4450e-03 | Validation Loss : 1.2751e-02

Training CC : 0.9733 | Validation CC : 0.9533

** Classification Losses **

Training Loss : 1.0397e-01 | Validation Loss : 8.5559e-01

Training F1 Macro: 0.9489 | Validation F1 Macro : 0.6641

Training F1 Micro: 0.9584 | Validation F1 Micro : 0.6660

Epoch 23, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.9086e-03 | Validation Loss : 1.2338e-02

Training CC : 0.9726 | Validation CC : 0.9536

** Classification Losses **

Training Loss : 1.5325e-01 | Validation Loss : 8.3072e-01

Training F1 Macro: 0.9345 | Validation F1 Macro : 0.6725

Training F1 Micro: 0.9393 | Validation F1 Micro : 0.6720

Epoch 23, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.9315e-03 | Validation Loss : 1.2355e-02

Training CC : 0.9745 | Validation CC : 0.9542

** Classification Losses **

Training Loss : 8.7520e-02 | Validation Loss : 8.5744e-01

Training F1 Macro: 0.9515 | Validation F1 Macro : 0.6702

Training F1 Micro: 0.9689 | Validation F1 Micro : 0.6700

Epoch 23, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.6386e-03 | Validation Loss : 1.1937e-02

Training CC : 0.9755 | Validation CC : 0.9556

** Classification Losses **

Training Loss : 2.3212e-01 | Validation Loss : 8.3361e-01

Training F1 Macro: 0.8921 | Validation F1 Macro : 0.6773

Training F1 Micro: 0.8836 | Validation F1 Micro : 0.6800

Epoch 23, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.8403e-03 | Validation Loss : 1.1840e-02

Training CC : 0.9755 | Validation CC : 0.9557

** Classification Losses **

Training Loss : 1.4800e-01 | Validation Loss : 8.3969e-01

Training F1 Macro: 0.9263 | Validation F1 Macro : 0.6680

Training F1 Micro: 0.9285 | Validation F1 Micro : 0.6720

Epoch 24, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.3760e-03 | Validation Loss : 1.1916e-02

Training CC : 0.9767 | Validation CC : 0.9554

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.9337e-01 | Validation Loss : 8.5417e-01

Training F1 Macro: 0.8829 | Validation F1 Macro : 0.6304

Training F1 Micro: 0.8798 | Validation F1 Micro : 0.6320

Epoch 24, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.8312e-03 | Validation Loss : 1.2025e-02

Training CC : 0.9756 | Validation CC : 0.9549

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3159e-01 | Validation Loss : 8.4960e-01

Training F1 Macro: 0.9314 | Validation F1 Macro : 0.6426

Training F1 Micro: 0.9403 | Validation F1 Micro : 0.6440

Epoch 24, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.6599e-03 | Validation Loss : 1.2106e-02

Training CC : 0.9757 | Validation CC : 0.9546

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.2548e-01 | Validation Loss : 8.6852e-01

Training F1 Macro: 0.9326 | Validation F1 Macro : 0.6410

Training F1 Micro: 0.9348 | Validation F1 Micro : 0.6400

Epoch 24, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 7.0657e-03 | Validation Loss : 1.2199e-02

Training CC : 0.9748 | Validation CC : 0.9543

** Classification Losses ** <---- Now Optimizing

Training Loss : 8.6306e-02 | Validation Loss : 8.7201e-01

Training F1 Macro: 0.9516 | Validation F1 Macro : 0.6488

Training F1 Micro: 0.9573 | Validation F1 Micro : 0.6500

Epoch 24, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.9443e-03 | Validation Loss : 1.2281e-02

Training CC : 0.9748 | Validation CC : 0.9539

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5603e-01 | Validation Loss : 8.5981e-01

Training F1 Macro: 0.8968 | Validation F1 Macro : 0.6425

Training F1 Micro: 0.9048 | Validation F1 Micro : 0.6460

Epoch 25, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.7960e-03 | Validation Loss : 1.1807e-02

Training CC : 0.9755 | Validation CC : 0.9558

** Classification Losses **

Training Loss : 1.8760e-01 | Validation Loss : 8.3187e-01

Training F1 Macro: 0.9100 | Validation F1 Macro : 0.6752

Training F1 Micro: 0.9080 | Validation F1 Micro : 0.6780

Epoch 25, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.2898e-03 | Validation Loss : 1.1782e-02

Training CC : 0.9770 | Validation CC : 0.9563

** Classification Losses **

Training Loss : 1.7058e-01 | Validation Loss : 8.4101e-01

Training F1 Macro: 0.9096 | Validation F1 Macro : 0.6540

Training F1 Micro: 0.9099 | Validation F1 Micro : 0.6560

Epoch 25, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.2050e-03 | Validation Loss : 1.1777e-02

Training CC : 0.9775 | Validation CC : 0.9561

** Classification Losses **

Training Loss : 1.3243e-01 | Validation Loss : 8.4678e-01

Training F1 Macro: 0.9281 | Validation F1 Macro : 0.6581

Training F1 Micro: 0.9244 | Validation F1 Micro : 0.6620

Epoch 25, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.2643e-03 | Validation Loss : 1.1752e-02

Training CC : 0.9775 | Validation CC : 0.9563

** Classification Losses **

Training Loss : 1.6075e-01 | Validation Loss : 8.5280e-01

Training F1 Macro: 0.8845 | Validation F1 Macro : 0.6536

Training F1 Micro: 0.9310 | Validation F1 Micro : 0.6580

Epoch 25, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 7.6090e-03 | Validation Loss : 1.1833e-02

Training CC : 0.9741 | Validation CC : 0.9565

** Classification Losses **

Training Loss : 1.7908e-01 | Validation Loss : 8.3545e-01

Training F1 Macro: 0.9072 | Validation F1 Macro : 0.6727

Training F1 Micro: 0.9045 | Validation F1 Micro : 0.6740

Epoch 26, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.5355e-03 | Validation Loss : 1.1902e-02

Training CC : 0.9769 | Validation CC : 0.9562

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3773e-01 | Validation Loss : 8.6412e-01

Training F1 Macro: 0.9130 | Validation F1 Macro : 0.6296

Training F1 Micro: 0.9073 | Validation F1 Micro : 0.6260

Epoch 26, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.6034e-03 | Validation Loss : 1.2097e-02

Training CC : 0.9767 | Validation CC : 0.9555

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8693e-01 | Validation Loss : 8.2975e-01

Training F1 Macro: 0.8809 | Validation F1 Macro : 0.6717

Training F1 Micro: 0.9176 | Validation F1 Micro : 0.6760

Epoch 26, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.9234e-03 | Validation Loss : 1.2210e-02

Training CC : 0.9757 | Validation CC : 0.9551

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3756e-01 | Validation Loss : 8.4827e-01

Training F1 Macro: 0.9289 | Validation F1 Macro : 0.6630

Training F1 Micro: 0.9199 | Validation F1 Micro : 0.6680

Epoch 26, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.7050e-03 | Validation Loss : 1.2251e-02

Training CC : 0.9759 | Validation CC : 0.9549

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.0720e-01 | Validation Loss : 8.4041e-01

Training F1 Macro: 0.9053 | Validation F1 Macro : 0.6773

Training F1 Micro: 0.9407 | Validation F1 Micro : 0.6780

Epoch 26, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.9454e-03 | Validation Loss : 1.2291e-02

Training CC : 0.9754 | Validation CC : 0.9548

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.0907e-01 | Validation Loss : 8.7245e-01

Training F1 Macro: 0.8698 | Validation F1 Macro : 0.6428

Training F1 Micro: 0.8692 | Validation F1 Micro : 0.6420

Epoch 27, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.5478e-03 | Validation Loss : 1.1961e-02

Training CC : 0.9762 | Validation CC : 0.9550

** Classification Losses **

Training Loss : 1.7095e-01 | Validation Loss : 8.2854e-01

Training F1 Macro: 0.8678 | Validation F1 Macro : 0.6808

Training F1 Micro: 0.9182 | Validation F1 Micro : 0.6840

Epoch 27, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.6915e-03 | Validation Loss : 1.2062e-02

Training CC : 0.9763 | Validation CC : 0.9557

** Classification Losses **

Training Loss : 1.8538e-01 | Validation Loss : 8.0424e-01

Training F1 Macro: 0.9061 | Validation F1 Macro : 0.6884

Training F1 Micro: 0.9211 | Validation F1 Micro : 0.6920

Epoch 27, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.1259e-03 | Validation Loss : 1.1760e-02

Training CC : 0.9776 | Validation CC : 0.9561

** Classification Losses **

Training Loss : 1.6386e-01 | Validation Loss : 8.1270e-01

Training F1 Macro: 0.9253 | Validation F1 Macro : 0.6897

Training F1 Micro: 0.9241 | Validation F1 Micro : 0.6900

Epoch 27, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.9430e-03 | Validation Loss : 1.1558e-02

Training CC : 0.9784 | Validation CC : 0.9572

** Classification Losses **

Training Loss : 1.1993e-01 | Validation Loss : 8.1793e-01

Training F1 Macro: 0.9353 | Validation F1 Macro : 0.6836

Training F1 Micro: 0.9362 | Validation F1 Micro : 0.6860

Epoch 27, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.6655e-03 | Validation Loss : 1.1323e-02

Training CC : 0.9791 | Validation CC : 0.9578

** Classification Losses **

Training Loss : 1.8439e-01 | Validation Loss : 8.0809e-01

Training F1 Macro: 0.8148 | Validation F1 Macro : 0.6776

Training F1 Micro: 0.8995 | Validation F1 Micro : 0.6780

Epoch 28, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.7710e-03 | Validation Loss : 1.1375e-02

Training CC : 0.9793 | Validation CC : 0.9576

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.6920e-01 | Validation Loss : 8.1023e-01

Training F1 Macro: 0.9095 | Validation F1 Macro : 0.6824

Training F1 Micro: 0.9059 | Validation F1 Micro : 0.6820

Epoch 28, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.6112e-03 | Validation Loss : 1.1456e-02

Training CC : 0.9795 | Validation CC : 0.9573

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.8149e-01 | Validation Loss : 8.0933e-01

Training F1 Macro: 0.9325 | Validation F1 Macro : 0.6672

Training F1 Micro: 0.9297 | Validation F1 Micro : 0.6660

Epoch 28, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.7544e-03 | Validation Loss : 1.1528e-02

Training CC : 0.9791 | Validation CC : 0.9570

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.6036e-01 | Validation Loss : 8.2073e-01

Training F1 Macro: 0.9014 | Validation F1 Macro : 0.6646

Training F1 Micro: 0.9183 | Validation F1 Micro : 0.6640

Epoch 28, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.7814e-03 | Validation Loss : 1.1598e-02

Training CC : 0.9789 | Validation CC : 0.9568

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.7690e-01 | Validation Loss : 8.3923e-01

Training F1 Macro: 0.9221 | Validation F1 Macro : 0.6722

Training F1 Micro: 0.9181 | Validation F1 Micro : 0.6720

Epoch 28, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.0055e-03 | Validation Loss : 1.1664e-02

Training CC : 0.9784 | Validation CC : 0.9565

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.1044e-01 | Validation Loss : 8.5828e-01

Training F1 Macro: 0.9339 | Validation F1 Macro : 0.6266

Training F1 Micro: 0.9365 | Validation F1 Micro : 0.6260

Epoch 29, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.0260e-03 | Validation Loss : 1.1496e-02

Training CC : 0.9786 | Validation CC : 0.9571

** Classification Losses **

Training Loss : 2.1454e-01 | Validation Loss : 8.4726e-01

Training F1 Macro: 0.8673 | Validation F1 Macro : 0.6535

Training F1 Micro: 0.8693 | Validation F1 Micro : 0.6520

Epoch 29, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.7346e-03 | Validation Loss : 1.1429e-02

Training CC : 0.9793 | Validation CC : 0.9577

** Classification Losses **

Training Loss : 1.9326e-01 | Validation Loss : 8.1522e-01

Training F1 Macro: 0.8950 | Validation F1 Macro : 0.6816

Training F1 Micro: 0.8892 | Validation F1 Micro : 0.6820

Epoch 29, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.6571e-03 | Validation Loss : 1.1586e-02

Training CC : 0.9776 | Validation CC : 0.9571

** Classification Losses **

Training Loss : 1.5835e-01 | Validation Loss : 8.4449e-01

Training F1 Macro: 0.9246 | Validation F1 Macro : 0.6684

Training F1 Micro: 0.9187 | Validation F1 Micro : 0.6680

Epoch 29, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.0160e-03 | Validation Loss : 1.1712e-02

Training CC : 0.9786 | Validation CC : 0.9570

** Classification Losses **

Training Loss : 1.3173e-01 | Validation Loss : 8.4895e-01

Training F1 Macro: 0.9461 | Validation F1 Macro : 0.6545

Training F1 Micro: 0.9376 | Validation F1 Micro : 0.6540

Epoch 29, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.0046e-03 | Validation Loss : 1.1446e-02

Training CC : 0.9786 | Validation CC : 0.9573

** Classification Losses **

Training Loss : 2.1249e-01 | Validation Loss : 8.2564e-01

Training F1 Macro: 0.8971 | Validation F1 Macro : 0.6800

Training F1 Micro: 0.8965 | Validation F1 Micro : 0.6800

Epoch 30, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.5131e-03 | Validation Loss : 1.1506e-02

Training CC : 0.9798 | Validation CC : 0.9571

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.1722e-01 | Validation Loss : 8.5023e-01

Training F1 Macro: 0.9470 | Validation F1 Macro : 0.6554

Training F1 Micro: 0.9469 | Validation F1 Micro : 0.6560

Epoch 30, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.1789e-03 | Validation Loss : 1.1620e-02

Training CC : 0.9784 | Validation CC : 0.9566

** Classification Losses ** <---- Now Optimizing

Training Loss : 9.3728e-02 | Validation Loss : 8.6545e-01

Training F1 Macro: 0.9165 | Validation F1 Macro : 0.6456

Training F1 Micro: 0.9627 | Validation F1 Micro : 0.6460

Epoch 30, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.9624e-03 | Validation Loss : 1.1678e-02

Training CC : 0.9787 | Validation CC : 0.9564

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.3745e-01 | Validation Loss : 8.4971e-01

Training F1 Macro: 0.9098 | Validation F1 Macro : 0.6606

Training F1 Micro: 0.9183 | Validation F1 Micro : 0.6580

Epoch 30, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.7588e-03 | Validation Loss : 1.1750e-02

Training CC : 0.9790 | Validation CC : 0.9561

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.2439e-01 | Validation Loss : 8.3425e-01

Training F1 Macro: 0.9499 | Validation F1 Macro : 0.6723

Training F1 Micro: 0.9469 | Validation F1 Micro : 0.6720

Epoch 30, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 6.0904e-03 | Validation Loss : 1.1768e-02

Training CC : 0.9783 | Validation CC : 0.9560

** Classification Losses ** <---- Now Optimizing

Training Loss : 1.5059e-01 | Validation Loss : 8.3959e-01

Training F1 Macro: 0.9306 | Validation F1 Macro : 0.6670

Training F1 Micro: 0.9508 | Validation F1 Micro : 0.6680

Epoch 31, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.8120e-03 | Validation Loss : 1.1515e-02

Training CC : 0.9789 | Validation CC : 0.9570

** Classification Losses **

Training Loss : 1.4424e-01 | Validation Loss : 8.4361e-01

Training F1 Macro: 0.9326 | Validation F1 Macro : 0.6708

Training F1 Micro: 0.9301 | Validation F1 Micro : 0.6700

Epoch 31, of 50 >-*-< Mini Epoch 2 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.6793e-03 | Validation Loss : 1.1356e-02

Training CC : 0.9798 | Validation CC : 0.9579

** Classification Losses **

Training Loss : 1.1209e-01 | Validation Loss : 8.2991e-01

Training F1 Macro: 0.9419 | Validation F1 Macro : 0.6449

Training F1 Micro: 0.9523 | Validation F1 Micro : 0.6460

Epoch 31, of 50 >-*-< Mini Epoch 3 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 5.6743e-03 | Validation Loss : 1.1059e-02

Training CC : 0.9799 | Validation CC : 0.9589

** Classification Losses **

Training Loss : 1.5746e-01 | Validation Loss : 8.3515e-01

Training F1 Macro: 0.9225 | Validation F1 Macro : 0.6828

Training F1 Micro: 0.9186 | Validation F1 Micro : 0.6840

Epoch 31, of 50 >-*-< Mini Epoch 4 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.2405e-03 | Validation Loss : 1.0944e-02

Training CC : 0.9792 | Validation CC : 0.9588

** Classification Losses **

Training Loss : 1.5113e-01 | Validation Loss : 8.4694e-01

Training F1 Macro: 0.9277 | Validation F1 Macro : 0.6555

Training F1 Micro: 0.9297 | Validation F1 Micro : 0.6540

Epoch 31, of 50 >-*-< Mini Epoch 5 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses ** <---- Now Optimizing

Training Loss : 6.0254e-03 | Validation Loss : 1.1388e-02

Training CC : 0.9794 | Validation CC : 0.9580

** Classification Losses **

Training Loss : 1.9788e-01 | Validation Loss : 8.5736e-01

Training F1 Macro: 0.9060 | Validation F1 Macro : 0.6525

Training F1 Micro: 0.9128 | Validation F1 Micro : 0.6520

Epoch 32, of 50 >-*-< Mini Epoch 1 of 5 >-*-< Learning rate 1.000e-03

** Autoencoding Losses **

Training Loss : 5.3601e-03 | Validation Loss : 1.1427e-02

Training CC : 0.9805 | Validation CC : 0.9579

** Classification Losses ** <---- Now Optimizing

Training Loss : 2.0559e-01 | Validation Loss : 8.6597e-01